What if I told you that everything on the internet is generated by AI? From the videos you watch to the text you read, none of it is real; all of it is simulated. It’s a scary thought, isn’t it? Our entire digital reality could be nothing more than an elaborate computer program. But what if it’s not just a theory? What if there is evidence to support this claim?

In January 2021, this theory, dubbed “The Dead Internet Theory,” was laid out in a post on the forum Agora Road’s Macintosh Cafe, drawing on earlier anonymous posts from the image board 4chan. The TL;DR of the post reads:

“Large proportions of the supposedly human-produced content on the internet are actually generated by artificial intelligence networks in conjunction with paid secret media influencers in order to manufacture consumers for an increasing range of newly-normalized cultural products”

Given the last few months of breakthroughs and hype around AI, it’s becoming plausible that this theory could soon turn into reality. Corporations and state actors are already known to shape public opinion through fake grassroots campaigns, and the ability to fully automate those campaigns will not go unused. Meanwhile, advances in deepfake technology make it easy to generate videos and voice recordings that impersonate real people.

Once you have AI-generated content, amplifying its social signal, whether through a botnet or by purchasing likes, plays, and views, is trivial.

In this article, we’ll explore some of the innovations and examples in AI across text, image, audio, video, and music, and what they mean for the future of the internet. Specifically, we’ll explore the idea of a simulated internet: one where the majority of content is generated by AI.

The Rise of Generative AI

Text

Within the text domain, OpenAI’s GPT-3 is one of the strongest text models available right now, although the Chinese government-backed Beijing Academy of Artificial Intelligence recently launched Wu Dao 2.0, the largest language model to date. With 1.75 trillion parameters, Wu Dao 2.0 reportedly surpassed state-of-the-art results on nine benchmarks, which test abilities such as knowledge comprehension.

The main issue, of course, is that these models are closed source and gated behind APIs with terms of service. GPT-J, an open-source model from EleutherAI, is a solid alternative: it doesn’t match the largest closed models, but it performs comparably to GPT-3 models of similar size.

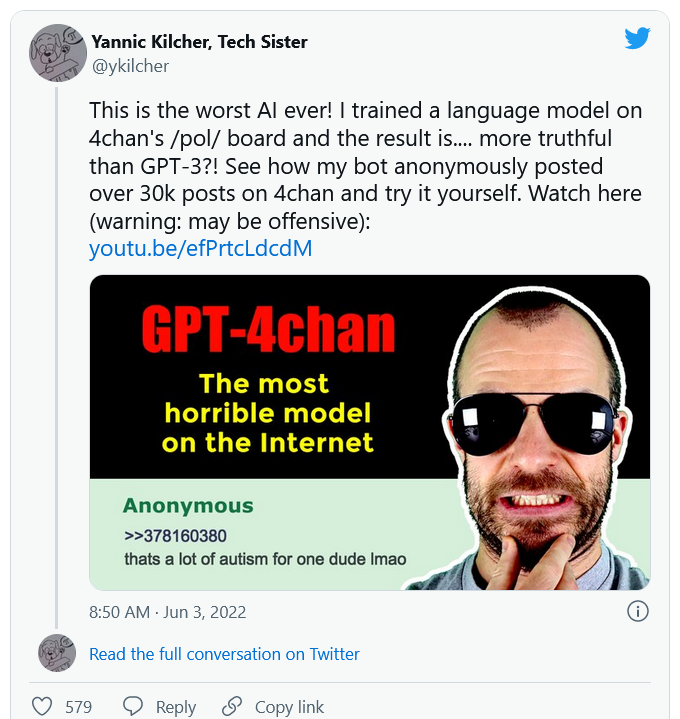

We’ve already seen a YouTuber take the GPT-J model and build a bot that posted on 4chan and interacted with its users. Unsurprisingly, this drew plenty of media and public backlash, yet the question remains: how often does the output of such bots go unnoticed?

The chatbots on Character AI are also quite impressive and respond reasonably well to questions, which suggests that a text-based social network populated entirely by bots isn’t far-fetched. You can already see a glimpse of this in the SubSimulatorGPT2 subreddit, where every post and comment is written by GPT-2 bots. There have even been reports of men falling in love with their AI chatbot girlfriends.

Image

Image generation is another area that has seen significant progress in the past year. The release of DALL-E, an AI program that creates images from textual descriptions, showed just how far generative AI has come. Systems like this are designed to create new content rather than merely recognize and categorize existing content.

However, as with GPT-3, access to DALL-E is restricted and heavily filtered. As a result, people have flocked to Stable Diffusion, an open-source alternative. AUTOMATIC1111 arguably has the best open-source web UI for Stable Diffusion, integrating many of the best practices and features in the image generation space.

A recent project that highlights the potential of image generation is Avatar AI, which creates great-looking avatars from an initial input image. Pair it with This Human Does Not Exist, and a single individual could easily spin up thousands of human-looking accounts.

Audio

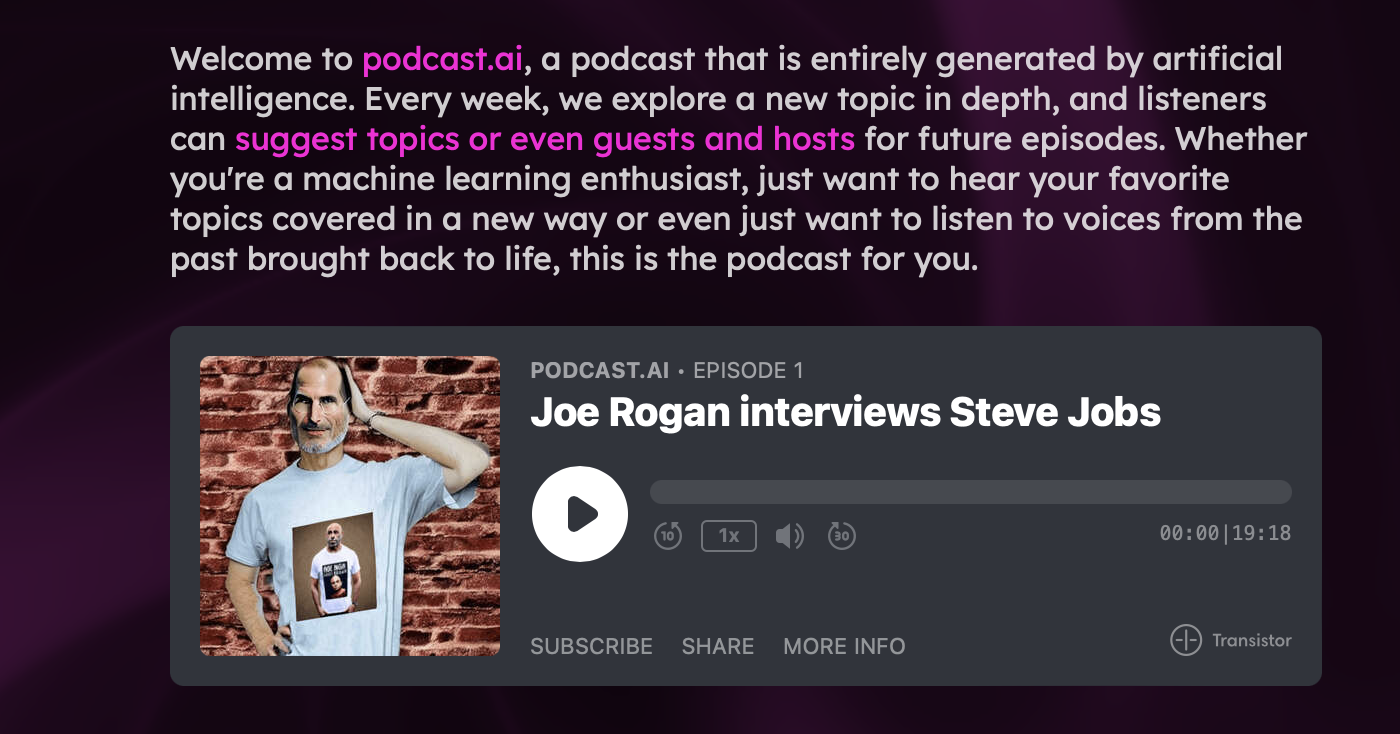

What if you could simulate a podcast conversation between two people, one dead and one alive? That’s what podcast.ai set out to do when it released an AI-generated conversation between Joe Rogan and Steve Jobs. Listening to it, you can’t help but think that this is exactly what it would have sounded like if the two had ever gotten the chance to meet.

We also now have plenty of text-to-speech generators and APIs; with Play HT, for example, you could vocalize 240k words per month for only $15. Pairing a text-based language model with a text-to-speech generator would likely fool most people who interact with the result.

Video

Back in 2016, the Elsagate controversy revealed how easy it was to upload and monetize computer-generated videos on YouTube. These videos, mostly aimed at children, often featured disturbing or violent content. While many of them were quickly taken down, some had already racked up millions of views, showing just how successful algorithmically generated videos could be.

Beyond fully algorithmically generated videos, we now also have AI avatars that can speak on our behalf. For example, Yepic.ai allows users to generate 20 minutes of video content for $29/month with realistic avatars and voices.

Music

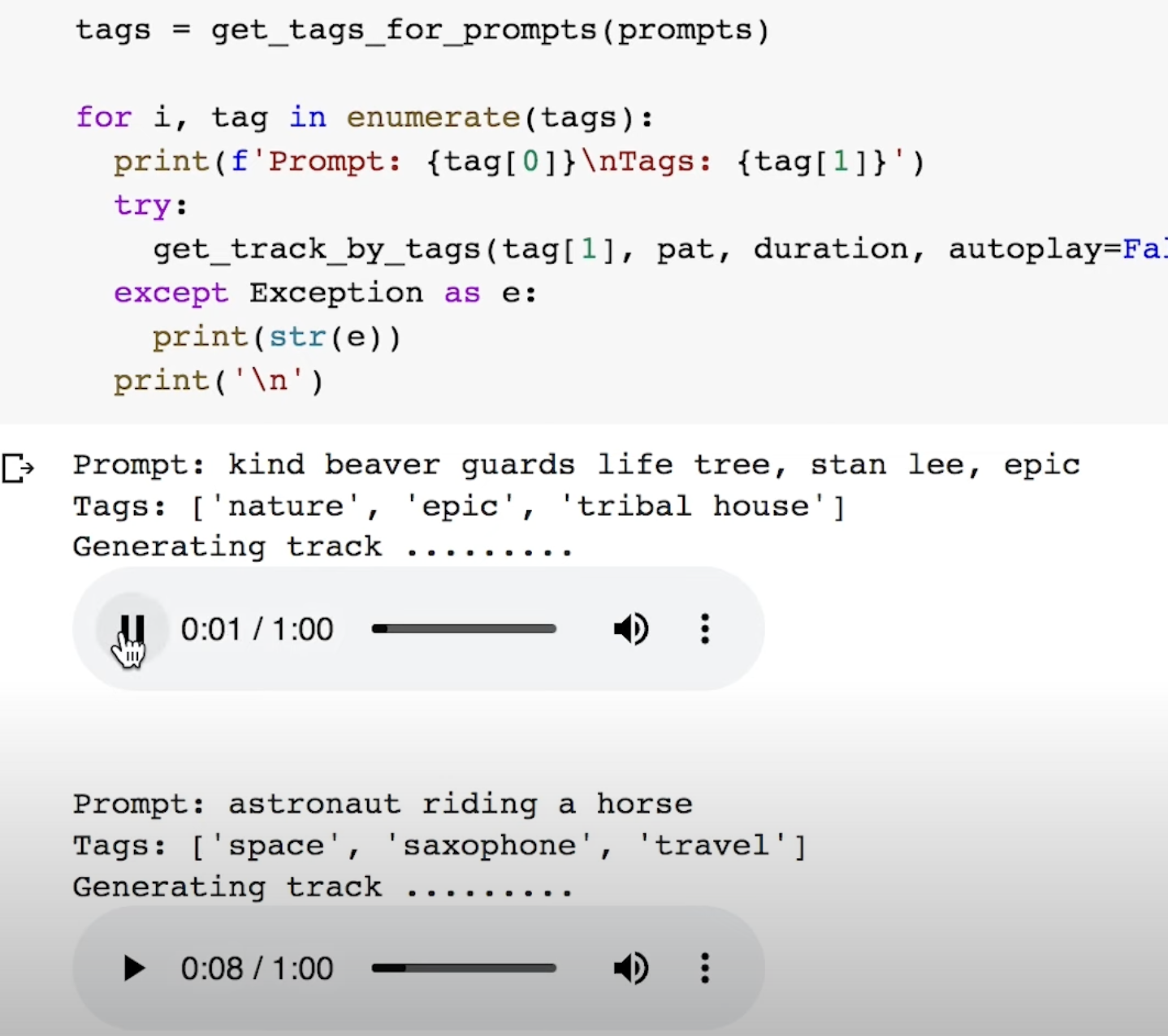

OpenAI’s Jukebox was one of the first projects I came across with genuinely compelling music generation and style transfer. Since then, we’ve seen improvements in this domain from projects like Dance Diffusion and Mubert.

This could be yet another tool in an algorithmically generated internet, where music influencers appear human but are actually pumping out lyrics and content meant to shape public perception. Throughout history, music has served as a catalyst for many societal changes, and an algorithmically generated top-10 hit within this decade doesn’t seem far-fetched.

Mitigating The Fallout

Naturally, the question becomes: what do we do about this? The technology clearly exists already, and if you believe that state actors and corporations won’t take advantage of it to advance their goals, you’re sorely mistaken.

While big tech companies have invested money in detecting bots, the incentive to root them out is not always there. If you’re an executive and your bonus depends on increasing monthly active users, why would you work on lowering that number?

As a result, our online discourse can now be shaped by a handful of people posing as millions of accounts.

Return of Local Town Squares

One alternative to the simulated internet is the return of the local town square. Venice Beach, California, is a great example. Walking down the boardwalk, you’ll encounter musicians, merchants, and residents all going about their day. Its proximity to the beach makes it a natural gathering point where people can connect and relate to one another.

Nextdoor is quickly growing in popularity and is a great way to get to know the people who live near you and build a sense of community. Although it is still an online platform, membership is limited to people who live in your neighborhood, which makes it harder for bots to penetrate.

Also, while church attendance and religious adherence have been declining steadily over time, we could see a revival of this important gathering space given the societal meaning crisis we’re currently experiencing.

Proof Of Humanity

One of the famous problems with online digital systems is the Sybil attack, in which one person pretends to be many people in order to gain an advantage within a system, for example by casting dozens of votes in an online poll.

Efforts to solve the Sybil attack problem run into what is known as the Decentralized Identity Trilemma, which holds that it is hard for a system to achieve all three of the following properties at once:

1) Sybil-resistance (a system where it is hard for one person to have multiple identities)

2) Self-sovereignty (a system where anybody can create and control an identity without the involvement of a centralized third party)

3) Privacy preservation (a system where one can acquire and utilize an identifier without revealing personally-identifying information in the process)

This paper explores various approaches to the decentralized identity trilemma, but in general there seem to be no clear solutions, merely trade-offs. Approaches range from voting and vouching to interpreting data submitted by users.
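To make the Sybil problem and the vouching approach concrete, here is a toy Python sketch. It is not taken from the paper mentioned above or from any real system; the function names (`naive_tally`, `vouched_tally`), the trusted seed set, and the vouch threshold `k` are illustrative assumptions. A naive poll counts one vote per account, so five bot accounts can outvote three humans. The vouching version only counts accounts that trace back to a trusted seed through at least `k` vouches from already-verified members.

```python
from collections import Counter


def naive_tally(votes):
    """One account, one vote: every account is assumed to be a distinct person."""
    return Counter(votes.values())


def vouched_tally(votes, vouches, seed, k=2):
    """Count a vote only if its account is verified.

    Hypothetical rule: an account is verified if it is in the trusted `seed`
    set, or if at least `k` already-verified accounts vouch for it.
    Verification spreads outward from the seed until no new account qualifies.
    """
    verified = set(seed)
    changed = True
    while changed:
        changed = False
        for account, vouchers in vouches.items():
            if account not in verified and len(vouchers & verified) >= k:
                verified.add(account)
                changed = True
    return Counter(choice for account, choice in votes.items() if account in verified)


# Four humans: three trusted founders plus Dave, vouched for by two of them.
votes = {"alice": "A", "bob": "A", "carol": "A", "dave": "B"}
vouches = {"dave": {"alice", "bob"}}
seed = {"alice", "bob", "carol"}

# One attacker spins up five bot accounts that all vote "B"
# and vouch for each other.
bots = [f"bot{i}" for i in range(5)]
for bot in bots:
    votes[bot] = "B"
    vouches[bot] = set(bots) - {bot}

print(naive_tally(votes))                   # B: 6, A: 3 -> the bots decide the outcome
print(vouched_tally(votes, vouches, seed))  # A: 3, B: 1 -> the bots are ignored
```

In the trilemma’s terms, this buys some Sybil-resistance at the cost of self-sovereignty: a newcomer cannot join without existing members vouching for them, and just `k` careless or bribed members are enough to let any number of bots in.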

Worldcoin is one company proposing a Proof-of-Personhood Protocol based on scanning people’s irises with a device called the Orb; in return, individuals receive some cryptocurrency for their trouble.
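The core idea behind biometric proof of personhood can be sketched as a registry that refuses to enroll a template too similar to one it already holds. The sketch below is a hypothetical illustration, not Worldcoin’s actual protocol: the integer bit templates, the `PersonhoodRegistry` class, and the Hamming-distance threshold are all assumptions, chosen to show why a repeat scan of the same eye is rejected while a different person gets through.

```python
def hamming(a, b):
    """Number of bit positions in which two templates differ."""
    return bin(a ^ b).count("1")


class PersonhoodRegistry:
    """Toy one-person-one-identity registry (hypothetical, not Worldcoin's design).

    Templates are modeled as integers whose bits stand in for a biometric code.
    Two scans of the same eye are assumed to differ only by a few noisy bits,
    so any template within `threshold` bits of a stored one is treated as a
    person who has already enrolled.
    """

    def __init__(self, threshold):
        self.threshold = threshold
        self.templates = []  # every enrolled template must be retained

    def enroll(self, template):
        """Store the template and return True, unless a near-duplicate exists."""
        if any(hamming(template, t) <= self.threshold for t in self.templates):
            return False
        self.templates.append(template)
        return True


registry = PersonhoodRegistry(threshold=3)
alice = 0b1011_0110_1100_0011
print(registry.enroll(alice))                  # True: first scan
print(registry.enroll(alice ^ 0b101))          # False: same eye, 2 bits of noise
print(registry.enroll(0b0100_1001_0011_1100))  # True: a different person
```

Note that the registry has to keep every enrolled template around to detect duplicates, which is where the privacy concerns come from.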

The flip side of requiring some Proof Of Humanity is that it carries serious privacy implications and may lead to further concentration of power, especially in the hands of authoritarian regimes.

However, not taking any action against the bot problem will also have serious implications for how we govern our society. If our discourse is shaped by a few actors who can flood it with fake voices, in effect a social denial-of-service attack, we will be in an even worse state. Entire propaganda operations could be launched with a single command.

It seems like only a matter of time before we see the rise of simulated internets: online spaces created and managed by AI with the express purpose of manipulating human behavior. We’ve already seen hints of this with social media bots, but those are just the beginning. Imagine a world where entire websites, apps, and even virtual worlds are designed to influence your emotions, thoughts, and actions.

Unfortunately, there are no clear solutions, merely trade-offs that we must weigh through careful discussion with real people rather than simulated ones.